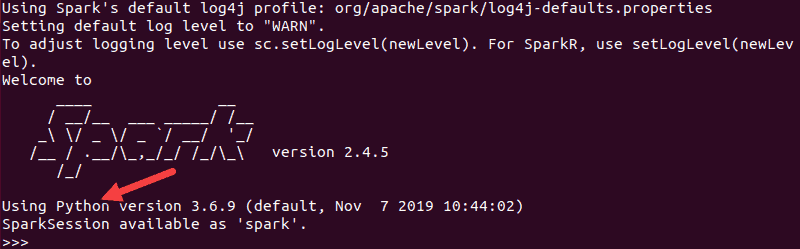

This post describes my experience installing GeoPySpark on Ubuntu 18.04.1 LTS.

GeoTrellis is a Scala library that uses Spark to work with large amounts of raster data in a distributed environment. It aims to provide raster processing at web speeds (sub-second or less).

GeoPySpark is a Python bindings library for GeoTrellis. It lets users work with a Python front-end backed by Scala, and it can do many of the operations present in GeoTrellis. Much of the functionality of GeoPySpark is handled by another library, PySpark (hence the name, GeoPySpark). PySpark itself is a Python binding of Spark, a processing engine available in multiple languages but whose core is in Scala.

Java 8 and Scala 2.11 are required by GeoTrellis. In addition, Spark needs to be installed and configured.

Requirement

Check whether Java is installed with `java -version`. The output should include a line like `OpenJDK 64-Bit Server VM (build 25.181-b13, mixed mode)`.

Check whether `JAVA_HOME` is set with `echo $JAVA_HOME`. To set it, add these lines to the `.bashrc` file: the comment `# JAVA`, followed by `export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64`.

Step #2 Install Scala

Install Scala with `sudo apt-get install scala`. Starting the Scala shell prints a banner that ends with `Or try :help.`

Download the Apache Spark file and extract the folder.

Add `$SPARK_HOME` as an environment variable for your shell by adding a line to the `.bashrc` file, under the comment `# Set SPARK_HOME`.

Edit the Spark config file. Change to the config directory with `cd /usr/local/spark/conf`, copy the template with `sudo cp spark-env.sh.template spark-env.sh`, then open the copy with `sudo nano /usr/local/spark/conf/spark-env.sh` and add this line: `export SPARK_MASTER_IP=127.0.0.1`.

Run the Python Spark shell with `/usr/local/spark/bin/pyspark`. You'll see something like this: a banner beginning with `Welcome to`.
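As a quick check of the setup this post describes, the following Python sketch reports whether `JAVA_HOME` and `SPARK_HOME` are set and whether `java` and `scala` can be found on the `PATH`. The function names are illustrative and are not part of Spark or GeoPySpark. The launcher location assumes the `bin/pyspark` layout under the Spark folder used in this post.

```python
import os
import shutil

# Variables and commands that this post sets up. The helper names below are
# illustrative only; they do not come from Spark or GeoPySpark.
REQUIRED_VARS = ("JAVA_HOME", "SPARK_HOME")
REQUIRED_COMMANDS = ("java", "scala")


def missing_vars(env):
    """Return the required variables that are unset or empty in env."""
    return [name for name in REQUIRED_VARS if not env.get(name)]


def missing_commands(path):
    """Return the required commands that cannot be found on the given PATH."""
    return [cmd for cmd in REQUIRED_COMMANDS
            if shutil.which(cmd, path=path) is None]


def pyspark_launcher(env):
    """Return the expected pyspark launcher path, or None if SPARK_HOME is unset."""
    spark_home = env.get("SPARK_HOME")
    if not spark_home:
        return None
    # Assumption: the launcher lives at <SPARK_HOME>/bin/pyspark.
    return os.path.join(spark_home, "bin", "pyspark")


if __name__ == "__main__":
    env = os.environ
    print("Unset variables:", missing_vars(env) or "none")
    print("Commands not on PATH:",
          missing_commands(env.get("PATH", "")) or "none")
    launcher = pyspark_launcher(env)
    if launcher is None:
        print("SPARK_HOME is unset, so the pyspark launcher cannot be located")
    else:
        state = "exists" if os.path.isfile(launcher) else "not found"
        print("pyspark launcher:", launcher, "(" + state + ")")
```

Run it in a new terminal, so that any changes to `.bashrc` have been picked up. If it lists an unset variable or a missing command, revisit the matching step before starting the PySpark shell.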
